A quick guide to Sentiment Analysis

Sentiment Analysis is a subfield of Natural Language Processing (NLP) that aims to decipher the subjective information in a piece of text to understand its polarity (positive, negative, or neutral) and subjectivity. In this article, we delve into advanced techniques and libraries used in sentiment analysis, focusing on Python due to its versatility in data science and rich ecosystem of libraries.

Techniques for Sentiment Analysis

Sentiment analysis typically involves several stages: data preprocessing, feature extraction, and model building. The complexity of these stages varies significantly depending on the technique used. They range from simple rule-based methods to sophisticated deep learning techniques.

Rule-based methods, like VADER (Valence Aware Dictionary and sEntiment Reasoner), are relatively simple, relying on a set of manually curated rules and a lexicon (a collection of known words and their sentiment scores). They are easy to implement, but they often miss context and nuance, such as sarcasm or domain-specific word usage.
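To make the lexicon idea concrete, here is a minimal sketch of a lexicon-based scorer in plain Python. The `LEXICON` dictionary and the `lexicon_score` and `lexicon_label` functions are hypothetical names invented for this illustration; they are not part of VADER or any other library, and a real lexicon contains thousands of scored entries plus additional rules.

```python
import re

# Toy lexicon for illustration only; the words and scores are made up.
LEXICON = {"good": 1.0, "great": 2.0, "love": 2.0,
           "bad": -1.0, "terrible": -2.0, "hate": -2.0}

def lexicon_score(text):
    """Sum the lexicon scores of all known words in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(tok, 0.0) for tok in tokens)

def lexicon_label(text):
    """Map the summed score to a polarity label."""
    score = lexicon_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(lexicon_label("The food was great and I love the staff"))  # positive
print(lexicon_label("The movie was not good"))  # positive (the negation is missed)
```

The second call shows the weakness described above: a pure word lookup sees "good" and ignores the "not" in front of it.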

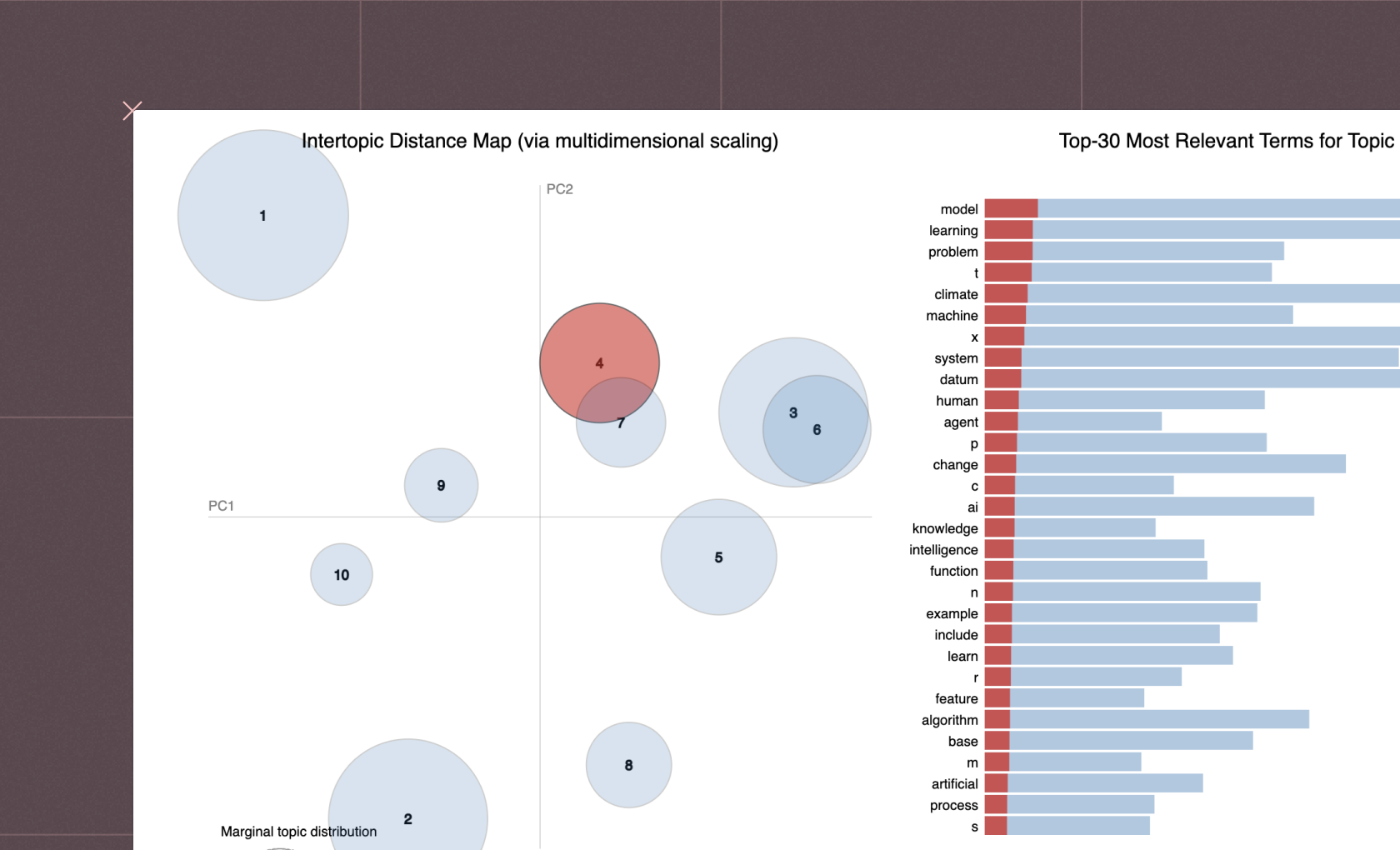

Machine Learning (ML) techniques often achieve higher accuracy by training models on labeled data, using algorithms like Naive Bayes, Logistic Regression, and Support Vector Machines (SVMs). Feature extraction is a crucial step here: raw text is transformed into a numeric feature vector using techniques like Bag of Words or TF-IDF (term frequency-inverse document frequency).
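As a rough sketch of this workflow, the example below chains TF-IDF feature extraction and Logistic Regression using scikit-learn. The six training sentences and their labels are made up for illustration, and a dataset this small is far too little for a useful model; the point is only to show how the pieces fit together.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Tiny made-up training set, for illustration only.
train_texts = [
    "I love this phone, the camera is excellent",
    "Great service and friendly staff",
    "Absolutely wonderful experience",
    "This is the worst purchase I have made",
    "Terrible battery life and poor support",
    "I hate the new update, it is awful",
]
train_labels = ["positive", "positive", "positive",
                "negative", "negative", "negative"]

# TF-IDF turns each text into a weighted term vector;
# logistic regression then learns a linear classifier on those vectors.
model = make_pipeline(TfidfVectorizer(), LogisticRegression())
model.fit(train_texts, train_labels)

# Classify two unseen snippets.
predictions = model.predict(["excellent camera", "awful support"])
print(list(predictions))
```

Swapping `LogisticRegression` for another scikit-learn classifier, such as `MultinomialNB` or `LinearSVC`, leaves the rest of the pipeline unchanged.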

Deep Learning techniques are the most complex and, given enough training data, often the most accurate, because the models learn abstract features directly from raw text. Recurrent Neural Networks (RNNs), and more specifically Long Short-Term Memory (LSTM) networks, have been successful because they model text as a sequence. Transformers like BERT take a different approach, using self-attention to relate every word in the input to every other word.

Libraries for Sentiment Analysis

Python provides an array of libraries that cater to different sentiment analysis techniques:

NLTK (Natural Language Toolkit): A comprehensive library for NLP, including sentiment analysis. It provides tools for data preprocessing and includes VADER, a lexicon and rule-based sentiment analysis tool.

Scikit-learn: An essential library for ML in Python. It offers a wide range of algorithms and utilities for preprocessing, feature extraction, and model evaluation.

Keras and TensorFlow: Deep learning libraries that allow the design, training, and deployment of neural networks. Both support the creation of advanced models like RNNs, LSTMs, and even pre-trained models like BERT for sentiment analysis.

spaCy and Hugging Face Transformers: spaCy is a powerful NLP library, and Transformers provides state-of-the-art pre-trained models like BERT, GPT-2, and T5. Used together, they form an advanced ecosystem for deep learning-based sentiment analysis.

TextBlob: Built on NLTK and another package called Pattern, it provides a simple API for common NLP tasks, including sentiment analysis.

Understanding these techniques and their corresponding libraries is essential to performing sentiment analysis effectively. It is also crucial to recognize that while advanced techniques often offer greater accuracy, they come with higher complexity and computational cost. The choice of technique therefore depends on the specific task, the available resources, and the level of accuracy required.

FAQ

Evaluating a sentiment analysis project involves using metrics like precision, recall, F1-score, and accuracy. These metrics can be calculated by comparing the model's predictions with the actual labels on a test dataset. In addition, you could use a confusion matrix to understand the type and number of errors made by the model. For continuous sentiment scores, metrics like Mean Absolute Error (MAE) or Root Mean Square Error (RMSE) can be used. Regular evaluation and tweaking of your model for better performance are key parts of any sentiment analysis project.
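The metrics above can be sketched in plain Python as follows. The function names `binary_metrics`, `mae`, and `rmse` and the sample labels are assumptions made for this illustration; in practice, libraries such as scikit-learn provide tested implementations.

```python
import math

def binary_metrics(y_true, y_pred, positive="positive"):
    """Compute accuracy, precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}

def mae(y_true, y_pred):
    """Mean Absolute Error for continuous sentiment scores."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root Mean Square Error for continuous sentiment scores."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

# Made-up labels and predictions for a five-example test set.
y_true = ["positive", "positive", "negative", "negative", "positive"]
y_pred = ["positive", "negative", "negative", "positive", "positive"]
print(binary_metrics(y_true, y_pred))
print(mae([0.8, -0.5, 0.0], [0.6, -0.1, 0.2]))
```

The counts `tp`, `fp`, and `fn` are the same cells a confusion matrix would show, so the error types mentioned above can be read directly from them.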

Sentiment analysis is a component of text mining, which refers to the process of deriving high-quality information from text. While text mining may involve extracting various types of information and patterns from text data, sentiment analysis specifically focuses on identifying and extracting subjective information to understand the sentiment expressed.

Generally, sentiment analysis works by processing textual data to extract subjective information, i.e., sentiments. This could involve simple techniques like checking for the presence of positive or negative words, or more complex techniques like using machine learning algorithms trained on a labeled dataset to classify the sentiment.

Aspect-based sentiment analysis involves determining sentiment regarding specific aspects in the text. Training a classifier involves collecting labeled data for each aspect, preprocessing the text, extracting features, and then training a suitable machine learning model, such as Naive Bayes, SVM, or even a deep learning model.
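To illustrate what "sentiment per aspect" means, here is a naive lexicon-based baseline rather than the trained classifier described above. The `ASPECTS` keywords, the `LEXICON` scores, and the `aspect_sentiment` function are all hypothetical and exist only for this sketch; it assumes that each clause talks about at most the aspects it names.

```python
import re

# Hypothetical aspect keywords and toy sentiment lexicon, for illustration only.
ASPECTS = {"battery": ["battery", "charge"], "screen": ["screen", "display"]}
LEXICON = {"great": 1, "sharp": 1, "good": 1, "poor": -1, "dim": -1, "terrible": -1}

def aspect_sentiment(text):
    """Score each aspect using only the clauses that mention it."""
    scores = {}
    # Split on punctuation and on the contrastive word "but".
    clauses = re.split(r"[.!?;,]|\bbut\b", text.lower())
    for clause in clauses:
        tokens = re.findall(r"[a-z']+", clause)
        clause_score = sum(LEXICON.get(tok, 0) for tok in tokens)
        for aspect, keywords in ASPECTS.items():
            if any(kw in tokens for kw in keywords):
                scores[aspect] = scores.get(aspect, 0) + clause_score
    return scores

print(aspect_sentiment("The screen is sharp, but the battery is terrible."))
# {'screen': 1, 'battery': -1}
```

A trained approach would replace the lexicon lookup with a classifier fitted on labeled examples for each aspect, but the output shape (one sentiment per aspect) stays the same.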

Handling negations in sentiment analysis can be tricky as it involves understanding the context. Techniques include using bigrams or trigrams that keep negation words with the words they modify, or using dependency parsing to capture the relationships between words. Advanced deep learning models like LSTMs or transformers can also effectively handle negations.
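The bigram idea can be sketched in a few lines of plain Python. The `NEGATIONS` set and both function names are assumptions chosen for this example; the short word list is not exhaustive.

```python
import re

# A small, non-exhaustive set of negation words, for illustration only.
NEGATIONS = {"not", "no", "never"}

def unigrams_and_bigrams(text):
    """Return single tokens plus adjacent token pairs joined by '_'."""
    tokens = re.findall(r"[a-z']+", text.lower())
    bigrams = [f"{a}_{b}" for a, b in zip(tokens, tokens[1:])]
    return tokens + bigrams

def negated_bigrams(text):
    """Return only the bigrams whose first word is a negation."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [f"{a}_{b}" for a, b in zip(tokens, tokens[1:]) if a in NEGATIONS]

print(unigrams_and_bigrams("not good"))
# ['not', 'good', 'not_good']
print(negated_bigrams("The plot was not good and never boring"))
# ['not_good', 'never_boring']
```

With features like `not_good` in the vocabulary, a classifier can learn a negative weight for the pair even though `good` on its own is positive.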

There are multiple ways to retrieve datasets for sentiment analysis. Many pre-labeled datasets are available in repositories like Kaggle or UCI Machine Learning Repository. Alternatively, you can scrape data from social media or other websites using Python libraries like Beautiful Soup or Scrapy, or APIs provided by the platforms.

To perform sentiment analysis on Instagram, you first need to collect the data (posts, comments, or captions) using Instagram's API or Python libraries like Instaloader. After cleaning and preprocessing the data, you can use sentiment analysis tools like TextBlob or VADER, or train a machine learning model, to derive sentiments.

spaCy can be used for text preprocessing tasks in sentiment analysis, including tokenization, lemmatization, and part-of-speech tagging. However, spaCy does not provide sentiment scores out of the box. For the actual sentiment analysis, you can use additional libraries like TextBlob, or train your own classifier using machine learning techniques.

To implement a sentiment analysis project, start by clearly defining your problem statement and objectives. Collect and preprocess the relevant text data, then extract meaningful features. Choose the appropriate sentiment analysis method, ranging from rule-based methods to machine learning or deep learning models, then train and evaluate your model.

Weka, a suite of machine learning tools, can be used for sentiment analysis. After installing Weka, you need to load your dataset, preprocess it using the filters available in Weka, then choose and train a classifier (like Naive Bayes or SVM), and finally test the model on your testing data.

Performing sentiment analysis effectively involves multiple steps: quality data collection, thorough preprocessing, appropriate feature extraction, and selection of the right model based on your task. Regular evaluation and tweaking of your model are also essential. For complex tasks, you might need to use more advanced techniques such as deep learning models.

Performing sentiment analysis in non-English languages like Tamil requires access to Tamil text data and, for lexicon-based methods, a Tamil sentiment lexicon. You can use Python's NLTK library for text preprocessing (such as tokenization), but verify that each step actually works for Tamil, since many tools are designed around English. For more accurate models, you might need models pre-trained on Tamil, or you can adapt existing models using transfer learning.

To learn sentiment analysis, start by understanding the basics of NLP and Python programming. Online courses, tutorials, and books on NLP, machine learning, and sentiment analysis are good resources. Work on practical projects, such as analyzing social media sentiment or customer reviews, to apply what you learn.

For email sentiment analysis, start with thorough preprocessing to remove irrelevant information like email headers, footers, and signatures. Then, use Python libraries like TextBlob for simple sentiment analysis or train a machine learning model if the task requires more accuracy. Remember to handle negations and contextual sentiments.
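A minimal sketch of the preprocessing step might look like the following. The `clean_email` function is a hypothetical helper; it assumes the input is a raw message whose headers end at the first blank line and whose signature starts with the conventional `--` delimiter line. Real mailboxes are messier and usually call for a proper parser, such as Python's built-in `email` package.

```python
def clean_email(raw):
    """Strip the header block and a trailing signature from a raw email."""
    # Assumption: headers are separated from the body by the first blank line.
    _, _, body = raw.partition("\n\n")
    kept = []
    for line in body.splitlines():
        # Assumption: a line containing only "--" starts the signature.
        if line.strip() == "--":
            break
        kept.append(line)
    return "\n".join(kept).strip()

raw_message = (
    "From: ann@example.com\n"
    "Subject: Order update\n"
    "\n"
    "The delivery was late and the box was damaged.\n"
    "-- \n"
    "Ann\n"
    "Sent from my phone"
)
print(clean_email(raw_message))
# The delivery was late and the box was damaged.
```

The cleaned body can then be passed to whichever sentiment method you choose, so that header fields and signature text do not skew the score.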

To improve your sentiment analysis code, ensure your data is thoroughly cleaned and preprocessed. Experiment with various feature extraction methods and models. For complex tasks, consider using advanced models like LSTMs or transformers. Also, continuously evaluate your model's performance and tweak parameters for optimal results.

Document-level sentiment analysis refers to understanding the sentiment of a whole document rather than individual sentences or words. This can be done by processing the entire document as a single entity using libraries like TextBlob or NLTK, or by using deep learning models like LSTMs or BERT, which take the surrounding context into account for classification. BERT-style models have a maximum input length, so long documents may need to be truncated or split into chunks.

Creating a sentiment analysis dataset involves collecting text data and labeling it with sentiments. You can scrape data from social media platforms, customer reviews, etc., using Python libraries like Beautiful Soup or Scrapy. After data collection, manually annotate the data for sentiments, or use semi-supervised techniques to help with this task.

To start a sentiment analysis project, first clearly define your project goals. Identify the data you'll be analyzing, whether it's tweets, customer reviews, or some other text data. Then, decide on the techniques and tools you'll use, like Python and its various libraries. After that, preprocess your data, build your sentiment analysis model, and evaluate its performance.

To study sentiment analysis in depth, start by understanding the basics of natural language processing and text preprocessing. Then learn about machine learning techniques for text classification and dive into libraries like NLTK, TextBlob, and scikit-learn. For advanced understanding, study deep learning models like RNNs, LSTMs, and transformer models like BERT.

To run sentiment analysis in Python, first install the necessary libraries, such as NLTK and TextBlob. Then load your text data, preprocess it, and use TextBlob's `.sentiment` property, which returns a polarity score and a subjectivity score. For complex tasks, you may need to train machine learning or deep learning models using libraries like scikit-learn or TensorFlow.

You can use Python libraries such as NLTK, TextBlob, and scikit-learn for sentiment analysis. These libraries allow you to preprocess text data, extract features, and implement machine learning models or use rule-based sentiment analysis methods.
